Published on Wednesday, 14 Feb, 2018

Audits as a strategic tool

This is the second long-form post in the Records Sound the Same 12 months of digital transformation series. In this article we’re going to be talking about the idea of assessing where you are in order to set strategic direction, help identify opportunities, and spark change.

Over the years, working with people in very different environments, I’ve frequently heard the phrase “if you can’t measure it, you can’t manage it” roll off tongues. Targets, and measurements against them, are commonly the way that companies decide how well they’re doing or act to change direction. Having an accurate, informed measurement of current status is useful when we’re planning how to do that thing better in the future.

But what does that mean when we apply it specifically to the world of digital?

Most digital projects that I work on involve some degree of auditing, where there’s work to capture the current state of play of different areas. These audits can be broad, but include activities like expert reviews of websites, capturing the ecosystem of systems and technologies in use, or mapping processes in order to work out where the web can help.

The goal is that by understanding the realities of where we’re at now, we can do even better in the future.

Later in this series of posts we’re going to look at some of the above types of review in more detail, but in this instance we’re going to zoom right out to get the big picture, and look at how undertaking an audit of how you’re working with digital can help strategically.

Things to think about

Let’s start with the basics of what we’re talking about. Google’s definition of an audit is “an official inspection of an organization’s accounts, typically by an independent body.” With our transformation strategic hat on (feel free to send in a postcard with a drawing of what this should look like, dear readers), we’re looking to inspect not financial accounts, but our relationship with digital, technologies, and all that comes under this umbrella.

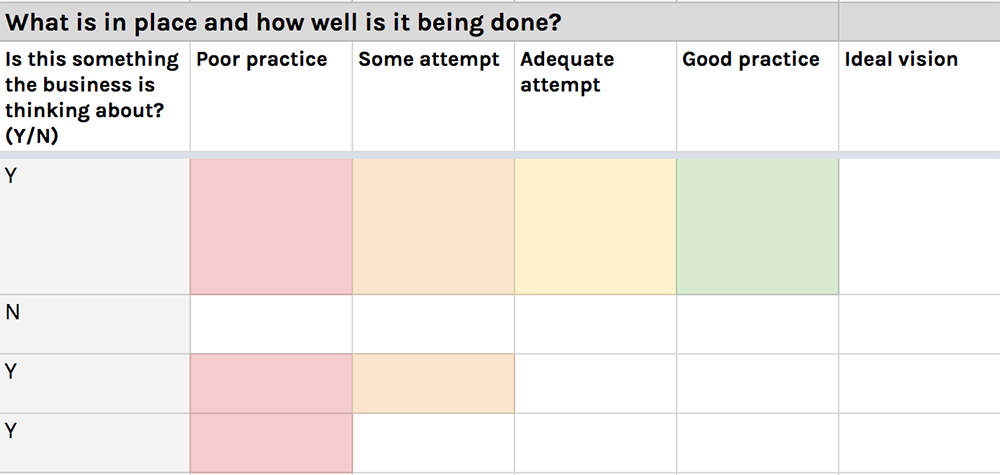

This will involve defining a set of different areas to review, looking at whether each one is being considered at the moment, and if so how well it’s being done. Within my reviews, I tend to focus on areas under the topics of systems and technology; processes; people, teams and culture; strategy, vision, and principles. These are more inter-linked than they may appear at first glance, and the findings in one area can actually be useful for understanding the others - it’s important to think holistically when considering changes to a strategy.

These kinds of assessments go by many names - for instance, labels like ‘digital maturity audit’ are quite common. Despite using it in the title of this post, I’m not actually keen on the word ‘audit’, as I find that it comes with negative connotations for clients, and most importantly for the people you may be working with to get a feel for where any of the company’s gaps are. Rather than understanding that this is a test of what can be done better as an organisation, individuals tend to associate the term with checking whether they personally aren’t complying, or are breaking rules, which can lead to some hostility. Likewise, ‘maturity’ doesn’t always feel right for me - your organisation can be digitally mature and yet do certain things well or badly. Instead, I tend to refer to undertaking a digital capability review - ‘capability’ referring to what’s possible at present and where it’s realistic to get to, and ‘review’ encouraging a repeat activity.

As in the definition of an audit, it’s also worth considering whether you can get an independent body to carry the review out, so that you can assess everything with as little bias and as few preconceptions as possible. This could be someone from outside of your organisation, who’s seen a range of people’s work with digital and can quickly spot areas of improvement (👋). However, that’s not always possible.

If you’re not able to get outside help, you’ll need someone on the inside who’s specifically tasked with shrugging off previous affiliations and taking a fresh look at the situation (note that this is often harder than it sounds!). If this is your approach, ensure that you have advance buy-in for this throughout the business - there is no point in your reviewer ensuring they’re fully free from bias and the shackles of their previous experiences, only for senior management to discount the recommendations “because they’ve come from Diana in IT - that lot are always sending us unrealistic suggestions.”

Why audits are useful strategically

At the end of your review process, your findings should mean that your organisation is in a position to:

- check that where you actually are matches where you think you are.

- quickly draw attention to problematic or well-performing areas.

- identify areas of opportunity.

- start further conversations.

- use the above to help inform your strategy for the future - how you’re going to make change happen.

The point about conversations is particularly key. The review will deal more in terms of ‘whats’ and ‘hows’, and won’t necessarily be able to uncover all of the whys - these are better picked apart by thinking about root causes (which we’ll look at in more detail in June) - or provide immediate solutions. At the end of (or even during) the review you should be prepared to have some potentially difficult and complex conversations about what’s been found, and then start to dig deeper into some aspects.

What a review will do is tell you what’s happening, and how well. You’ll gain a bird’s eye view of different areas, all of which add up to your overall relationship with digital and technology. Whilst there may be opinions within the business of what is going well, what could be better, or where there are some opportunities, a review will help to ground these, capture reality, and present findings for people to quickly digest.

As I alluded to when talking about naming, reviews can also be useful to track progress and action over time. How long have problems been going on for? Are they actually improving? By standardising the set of areas that you look at, you can see the evolution (or otherwise!) of points of focus.

Because this, of course, is our ultimate goal. By gaining all of the facts, we can then use these to help set our strategy. We’ll need to think about our priorities (the March article will cover prioritisation methods), what’s realistic, and what will make a difference.

But we’ve talked too long. Let’s put everything together into a step-by-step process that you can follow.

Carrying out your digital capability review

Our review will typically have three main parts: before, during, and after.

Before

1) Get buy-in, decide the scope, talk about a plan for the ‘after’ part.

2) Choose your reviewer.

3) Based on the scope, set out your assessment criteria.

These will be unique to each situation, but below is a link to a set of examples for you to build on. What you want to do here is to ensure each element being reviewed has a clear progression of gradings working up to your definition of what good looks like, and ultimately your ideal vision.

For example, one area that you may look at is how the business stores customer details:

- At one end of the scale may be paper-based records.

- The next level may be staff holding information individually (locally shared spreadsheets).

- Next could be collaborative, cloud-based spreadsheets.

- You may consider good practice to be using a dedicated CRM system.

- Your ideal vision may be to store customer data in specialist CRM software, with an API available to surface it to an area on your website where customers can directly update their information and preferences.

I don’t suggest using any more than 5 steps for this, but the number that you settle on should work well when all of the areas being assessed are considered.

4) Plan how you will get this information.

Who do you need to speak to, what do you need to review?
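To make the assessment criteria from step 3 a little more concrete, here’s a minimal sketch of how one review point and its ladder of gradings could be captured in Python. It uses the customer details example from above; the `ReviewPoint` class, its field names, the choice of ‘Systems and technology’ as the heading, and the lower limit of two gradings are all assumptions made for illustration rather than part of any particular template.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ReviewPoint:
    """One element under review, with gradings ordered from weakest to ideal."""

    heading: str             # topic heading (assumed here for illustration)
    question: str            # what is being assessed
    levels: tuple[str, ...]  # first entry = lowest grading, last = ideal vision

    def __post_init__(self) -> None:
        # The article suggests no more than 5 steps; the minimum of 2 is an assumption.
        if not 2 <= len(self.levels) <= 5:
            raise ValueError("use between 2 and 5 gradings per review point")

    def describe(self, level: int) -> str:
        """Return the wording for a 1-based level on the ladder."""
        if not 1 <= level <= len(self.levels):
            raise ValueError(f"level must be between 1 and {len(self.levels)}")
        return self.levels[level - 1]


customer_details = ReviewPoint(
    heading="Systems and technology",
    question="How does the business store customer details?",
    levels=(
        "Paper-based records",
        "Staff hold information individually (locally shared spreadsheets)",
        "Collaborative, cloud-based spreadsheets",
        "Dedicated CRM system",
        "Specialist CRM with an API so customers can update their own details",
    ),
)

print(customer_details.describe(4))  # Dedicated CRM system
```

Keeping the gradings in a fixed order means that a level number always has the same meaning for a given review point, which helps when results are compared later on.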

During

5) Identify whether each of your review points is being considered at the moment.

Make this yes or no - no ‘sort of’s allowed, as we’ll cover this in the next step.

6) How well is each one being done?

This is where we see how far up the ideal ladder we can tick.

7) Capture additional notes.

Aim to capture the rationale and examples behind each classification you make - this will come in useful when you’re feeding back. Other suggestions or recommendations may crop up during your investigations, and these are important not to lose.

8) Decide how the information needs to be presented.

Visual is good. If you’re using a template similar to the spreadsheet above, you’ll automatically end up with a visualisation of sorts, but radar charts (illustrated below) are also often a good bet.
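Steps 5 to 8 could be recorded along the following lines. This is only a sketch under some assumptions: the `Finding` class, the example review points and notes, and the decision to count a point that isn’t being considered as level 0 when averaging are all hypothetical choices. The per-heading averages are one possible set of values to plot on the axes of a radar chart.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Finding:
    heading: str      # topic heading the review point sits under
    point: str        # the review point being assessed
    considered: bool  # step 5: strictly yes or no
    level: int = 0    # step 6: rung reached on the ladder (0 = not considered)
    notes: str = ""   # step 7: rationale, examples, suggestions

    def __post_init__(self) -> None:
        if not self.considered and self.level != 0:
            raise ValueError("a point that is not considered cannot have a level")
        if self.considered and self.level < 1:
            raise ValueError("a considered point needs a level of 1 or more")


def average_by_heading(findings: list[Finding]) -> dict[str, float]:
    """Average level per heading (assumption: unconsidered points count as 0)."""
    grouped: dict[str, list[int]] = defaultdict(list)
    for finding in findings:
        grouped[finding.heading].append(finding.level)
    return {heading: sum(levels) / len(levels) for heading, levels in grouped.items()}


# Hypothetical findings, purely for illustration.
findings = [
    Finding("Systems and technology", "Customer details storage", True, 3,
            "Shared cloud spreadsheet; no CRM yet."),
    Finding("Systems and technology", "Website content management", True, 4),
    Finding("Processes", "Digital sign-off workflow", False,
            notes="Nobody owns this at present."),
    Finding("Processes", "Customer enquiries handling", True, 2),
]

print(average_by_heading(findings))
# {'Systems and technology': 3.5, 'Processes': 1.0}
```

Separating the yes/no answer from the level keeps step 5 honest, and holding the notes alongside each score means the rationale is to hand when feeding back.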

After

9) Feed back to the business.

This is where the discussion starts, but try to avoid finger-pointing. Keep that neutral perspective that served you well throughout doing the review. Be prepared to explain the rationale behind why you’ve scored things the way that you did (here’s where the notes come in handy). If you have previous reviews to compare with, bring up any important comparisons with results from the past.

What you’ll find, especially if you’ve visualised the results, is that there should be a clear shape of how you’re doing. A key point here is that it’s extremely unlikely that you’ll be getting top marks across the board, and that the whole point of this exercise is that you’re looking for opportunities to be better - embrace those lower scores! Maybe you’re average across the board, in which case you’ll have a range of options to focus on. Maybe you’re almost there in one area, and you want to do a final push of improvements before looking at other things. Or perhaps there’s an enormous set of red flags that you had no idea about, and everyone’s got to jump on them.

What this exercise will do is to provide a framework for you to surface the status of different points, capture evidence around them, and give people a central communication aid to refer to and rally behind.

10) Where you have suggestions for improvements, make them.

11) Undertake a group impact/effort/prioritisation activity for problem areas, or areas of opportunity. (We’ll look at this in March)

12) Decide how many areas are realistic to address in the next 3 months, 6 months, year, and allocate them to a timeframe.

13) Update your strategy and make a commitment to follow-up activities or actionable next steps to make the change happen.

14) Schedule your next review!
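Once a second review exists, comparing it with the first is what shows whether things are actually improving. A minimal sketch of that comparison is below, assuming each review is held as a simple mapping from review point to the level reached; the function name and the example data are hypothetical.

```python
def compare_reviews(previous: dict[str, int], current: dict[str, int]) -> dict[str, int]:
    """Return the change in level for every point present in both reviews."""
    return {
        point: current[point] - previous[point]
        for point in current
        if point in previous
    }


# Hypothetical levels from two reviews using the same standardised points.
first_review = {"Customer details storage": 2, "Website content management": 4}
second_review = {
    "Customer details storage": 3,
    "Website content management": 4,
    "Social media monitoring": 1,  # new point, so there is nothing to compare with
}

changes = compare_reviews(first_review, second_review)
for point, delta in changes.items():
    status = "improved" if delta > 0 else "slipped" if delta < 0 else "unchanged"
    print(f"{point}: {status} ({delta:+d})")
```

Points that only appear in one of the reviews are left out of the comparison, which is one reason why standardising the set of areas you look at pays off over time.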

Applying this to your own situation

As with most activities that we’re going to be doing, you’ll need to customise the suggestions here to your situation. For very large businesses you may find that there’s huge disparity between departments, and that the results start to become too complicated. You can cover this either with smaller reviews that feed into an overall picture, or by extending the criteria that you use to be a bit more conditional.

Whilst this post focuses on use by businesses and other organisations, individuals can also use these principles to help with their own strategy - you can keep the categories, but tweak the points.

Whether you’re looking to carry out a full-blown official review or something a little bit more light touch, I’d like to set the following as this month’s activity:

1) Thinking about your situation and using the above spreadsheet as a starting prompt, collect together a set of review points under each of the headings below.

- Systems and technology

- Processes

- People, teams, and culture

- Strategy, vision, and principles

2) Get someone else to look at your points - do they make sense? Is there anything that’s missing?

3) Think about what this tells you about the organisation, and consider carrying out a full review using the review points you’ve collected.

And finally…

Whilst the spreadsheet above contains some examples of the kind of areas that I typically look at when undertaking a digital capability review for my clients, it’s not my full set of points and isn’t reflective of variants for different industries, technology focus, or level of detail needed.

The plan is to create an online tool to better support clients and other people who may want a light-touch way to reflect on areas where they may be able to make improvements. Stay in touch if you’d like to hear more - to be kept in the loop, email hello@recordssoundthesame.com with a bit about your situation and any suggestions for what you’d like to see!

Posted by Sally Lait

Sally is the lead consultant and founder of Records Sound the Same, helping people with digital transformation. She's also a speaker, coder, gamer, author, and jasmine tea fiend.
